Progress Blog |

| 23. Sep 2008 Added support for loading JSGF files with rules that can be imported into other grammars. The first one I added is a grammar specifying numbers, and at the same time I added a general calculation module that you can ask to e.g. "Add 345 to 129" and perform other calculations. The same number grammar is used in the currency behaviour so that it can convert any uttered amount to the local currency. I have also added a moon phase grammar where you can ask it about the current moon phase, or the next full/new moon. Finally a sunrise/sunset grammar was added so you can ask when the sun rises or sets today or tomorrow. |
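To give an idea of how recognized number words can feed a calculation like "Add 345 to 129", here is a minimal Java sketch. It is not the JCP1 code: the class `NumberWords` and its `parse` method are made-up names, and it only handles English number words below one million.

```java
import java.util.Map;

/** Hypothetical helper: turns recognized English number words into an int. */
class NumberWords {
    private static final Map<String, Integer> VALUES = Map.ofEntries(
        Map.entry("zero", 0), Map.entry("one", 1), Map.entry("two", 2),
        Map.entry("three", 3), Map.entry("four", 4), Map.entry("five", 5),
        Map.entry("six", 6), Map.entry("seven", 7), Map.entry("eight", 8),
        Map.entry("nine", 9), Map.entry("ten", 10), Map.entry("eleven", 11),
        Map.entry("twelve", 12), Map.entry("thirteen", 13), Map.entry("fourteen", 14),
        Map.entry("fifteen", 15), Map.entry("sixteen", 16), Map.entry("seventeen", 17),
        Map.entry("eighteen", 18), Map.entry("nineteen", 19), Map.entry("twenty", 20),
        Map.entry("thirty", 30), Map.entry("forty", 40), Map.entry("fifty", 50),
        Map.entry("sixty", 60), Map.entry("seventy", 70), Map.entry("eighty", 80),
        Map.entry("ninety", 90));

    /** Parses e.g. "three hundred forty five" into 345. */
    static int parse(String words) {
        int total = 0;    // completed thousands groups
        int current = 0;  // value of the group being built
        for (String w : words.toLowerCase().trim().split("[\\s-]+")) {
            if (w.equals("and")) {
                continue; // "one hundred and twenty" is accepted
            } else if (w.equals("hundred")) {
                current = (current == 0 ? 1 : current) * 100;
            } else if (w.equals("thousand")) {
                total += (current == 0 ? 1 : current) * 1000;
                current = 0;
            } else {
                Integer v = VALUES.get(w);
                if (v == null) {
                    throw new IllegalArgumentException("Unknown number word: " + w);
                }
                current += v;
            }
        }
        return total + current;
    }
}
```

A calculation behaviour could then simply add `NumberWords.parse(...)` of the two recognized operands and speak the result.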

| 22. Sep 2008 Finally got JCP1 up and running again, well at least the OS, drivers and some software are installed by now. Also did a quick test and got my software running there as well, although with no devices active. I see that I have to rip out the annoying little CPU fan on this motherboard, it's absolutely terribly noisy spinning at 4000 rpm! My hope is either to find a bigger copper heatsink so that the thing can be passively cooled, or just mount a low speed 12 cm case fan above it instead. The DVD drive is fairly noisy as well, so I think the robot might pick up that noise when the drive is spinning at full speed. Hopefully it's somewhat muffled when it is mounted correctly inside the robot. The battery lasted for approximately 2.5 hours, but I think it was not fully charged since it had been unused for quite some time. I guess it needs some rounds of recharging to get it back up again. Hopefully I should be able to run the robot for 3 hours on one charge. |

| 19. Sep 2008 Lately I have been working on refining some of the robot software engine and added some enhancements that allow me to dynamically add and update expansion tokens, for example lists of names. An example use for this is that the robot will ask the connected server which music artists it can play, and these will update the grammar dynamically so that you can e.g. say "Play a song by Peter Gabriel". I have also polished the user interface a bit (it is only visible if you connect to the robot using VNC) and added two modules for the Behaviours and Sensors. Added some more configuration as well so that you can turn behaviours on/off, and this is saved. A fun new feature is that JCP1 now has its own email address where I can send a list of commands to it. Been playing with a little Family behaviour where you can tell it the names of your father, mother, brother, kids, wife, etc. and it will create a family tree structure where it can ask you about missing names, etc. I will also add the ability to say when each person was born so you are reminded of their birthday. I also added some grammars for asking about day and date. I plan to add more calendar grammars in the future. Finally I have just received the package with the slim slot-in DVD drive as well as the necessary cables to get JCP1 up and running again. I also need to get a Dremel tool because I need to cut some holes in the aluminium plating for the DVD drive, and I want to get the power button on the outside. Hopefully during the weekend it should be rolling again. :) |
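The dynamic expansion tokens can be pictured as regenerating one grammar rule whenever the list changes. Below is a minimal Java sketch that builds a JSGF rule from a list of names. The class `DynamicRule`, the rule name and the way the list arrives from the server are assumptions for illustration only.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper that turns a list of tokens into one JSGF rule. */
class DynamicRule {
    /** Builds e.g. "public <artist> = (Peter Gabriel | Sting);" */
    static String buildRule(String ruleName, List<String> tokens) {
        String alternatives = tokens.stream()
            .map(String::trim)
            .filter(t -> !t.isEmpty())       // skip blank entries
            .collect(Collectors.joining(" | "));
        if (alternatives.isEmpty()) {
            // An empty alternative list would not make a usable rule.
            throw new IllegalArgumentException("No tokens for rule <" + ruleName + ">");
        }
        return "public <" + ruleName + "> = (" + alternatives + ");";
    }
}
```

A command grammar could then reference the generated rule, for example `play a song by <artist>`, and be reloaded whenever the server reports a new artist list.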

| 10. Sep 2008 The last few days I have been adding DVD and CD ripping behaviours. When you insert a DVD it will ask if it should make a backup of it on your servers. It uses AnyDVD for this. The only problem I have bumped into is the name of the movie. There is no description file on the DVDs indicating what the title is, and often the volume label of the disc is cryptic, and sometimes the label is just DVD! I really wanted the robot to look up information about the movie on IMDb the moment you popped it in the drive so you could ask questions about it. I will probably have the robot ask you which movie it is if there isn't enough information on the volume label to figure it out. I also added Music CD backup so it can store your music CDs on the server as well. I will further experiment with playback on a remote server so you can essentially use the robot as a remote CD player on wheels and have the sound come out of your stereo. :) In addition to this I have added remote server picture viewing, and it can do image searches on Google Images and show the results in a slideshow. I will add support for user pictures where the directory name in your photo library is used as the identifier when you ask it to show pictures of certain things or people. For any of these remote server tasks I will add some grammars so that you can configure the behaviour of the robot in such a way that it will always e.g. play music on your living room server. At this point JCP1 only knows about one server, but the general idea is that any remote task can be directed to whichever display you want it to use, and in the future there will be an automatic mode where it knows which room it is in and uses the display in that room. |

| 9. Sep 2008 Decided to stick with the D201GLY2 board I use now, because the new Atom board from Intel consumes around the same amount of power due to its hungry chipset. Ordered the correct cables so I can get the D201GLY2 up and running, and also ordered a slim slot-in DVD drive for DVD and music ripping/playback. |

| 8. Sep 2008 I think I have found the best board for a PC based robot now. The Kontron KTUS15/mITX - 1.6 uses the new low-power Z530 Atom processor as well as the new US15W chipset, and together they consume less than 5 watts! A complete board would probably use a bit more, and I am waiting for a reply from the company about real power usage when idle and under load. But it does seem like this whole thing consumes less than 10 watts, which makes it absolutely brilliant for a robot. The benchmarks of the Z530 show it is about the same as the other Atom processors and should be more than enough to process both speech recognition and a web camera stream. |

| 8. Sep 2008 Been going back and forth trying to decide if I should get an Atom board, but it seems the D945GCLF from Intel consumes around the same amount of power as the D201GLY2 while performing worse on integer operations. The only advantages are more USB ports and the ability to plug the M3 Pico PSU directly into it (really silly placement of the power connector on the D201GLY2). They both require active cooling, the D201GLY2 on the CPU and the D945GCLF on the chipset (which consumes a lot of power). The new Dell Atom based mini laptop is the first to have 100% passive cooling, so that one might be a good robotic platform also, but I think I will wait for the next generation of low power boards before I do any upgrade again. After all, the D201GLY2 performs very well as it is now. With the new distributed server processes I can also use several other computers for processing vision, so CPU power on the robot is of less concern. But power usage is. My 10 Ah SLA battery would probably be dead within 3 hours with the current power consumption, and I'd like the whole robot to run for at least 6 hours between recharges. Since it's only a 10 Ah battery I can only charge it at 800 mA on my charger, which can take 10 hours. Some day I might fit the robot with NiMH or Li-ion packs, which will provide much longer runtime per kg. |
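The runtime figures above can be sanity checked with a little arithmetic. This Java sketch assumes a nominal 12 V pack (the entry only gives the 10 Ah capacity) and an ideal battery, so it ignores the capacity loss that real SLA batteries show at higher discharge rates. The class and method names are made up.

```java
/** Hypothetical back-of-the-envelope battery calculator (ideal battery assumed). */
class BatteryBudget {
    /** Stored energy in watt-hours: capacity (Ah) times nominal voltage (V). */
    static double energyWh(double capacityAh, double nominalVolts) {
        return capacityAh * nominalVolts;
    }

    /** Hours of runtime at a constant load in watts. */
    static double runtimeHours(double capacityAh, double nominalVolts, double loadWatts) {
        return energyWh(capacityAh, nominalVolts) / loadWatts;
    }

    /** Largest average load (W) that still reaches the wanted runtime. */
    static double maxLoadWatts(double capacityAh, double nominalVolts, double hours) {
        return energyWh(capacityAh, nominalVolts) / hours;
    }
}
```

Under these assumptions a 10 Ah pack holds about 120 Wh, so 3 hours of runtime corresponds to an average draw of roughly 40 W, and reaching 6 hours would mean getting the whole robot down to about 20 W.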

| 5. Sep 2008 Just ordered the Optimus Mini Three 2.0, a very cool "gadget" by Art Lebedev. It consists of 3 OLED screens which are also buttons. This will be mounted on the chest of the robot and serve as status feedback as well as a simple physical user interface (nice for resetting modes, running special programs, etc). I also ordered a 12 V LED light for the interior, which is nice when working on the robot's internals. And I found some nice acrylic cubes with screw holes that will be so much better for assembling the aluminium frame. They also had adhesive rubber edge trimmings that will hide the sharp edges and double as aesthetically pleasing lines. I also found a cool power button with an integrated LED, as well as a 3.5" memory card reader and USB port that will be on the front (I will add behaviours that ask if you want to copy the memory cards to the server when inserted). The only thing missing now is the Atom based board and a slim slot-in DVD drive. Hope to order those once I have figured out if a P4 connector wire is needed or supplied. |

| 4. Sep 2008 I have also spent the last few days adding support for the DICTATION grammar keyword. By that I mean that I can add DICTATION anywhere in a grammar to accept any word, which is then used as the tag in the result set. This enabled me to add a new InternetLookup behaviour with grammars for "Define XXX" and "What is a XXX". It will use answers.com and Wikipedia to get definitions and descriptions of the word you uttered and say them out loud. It works very well and it was quite fun to learn definitions of words I didn't exactly know the meaning of. But of course the recognition can often pick up the wrong word since there are many similar sounding words. This is the problem with all non-grammar recognition. The only way it can be improved is by giving the system an extra clue about what you are asking about. For example you could say "What is the color blue" and I could limit the dictated word (blue) to colors so it doesn't think you said glue or flu or something similar. This requires a lot of work to identify categories though, but I could also use the clues as search words and only match the articles that also contain the keyword (the word "color" is likely to be found more often in the article about "blue" than in the one about "glue"). This requires it to take all the word guesses and look them all up. |
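The keyword idea at the end of this entry can be sketched in a few lines of Java. This is only an illustration under assumptions: the article texts are passed in as a plain map (how they are fetched is left out), and the class `DictationDisambiguator` and its methods are hypothetical names.

```java
import java.util.List;
import java.util.Map;

/** Hypothetical scorer: prefers the word guess whose article mentions the clue most. */
class DictationDisambiguator {
    /** Counts whole-word, case-insensitive occurrences of keyword in text. */
    static int countOccurrences(String text, String keyword) {
        String wanted = keyword.toLowerCase();
        int count = 0;
        for (String word : text.toLowerCase().split("\\W+")) {
            if (word.equals(wanted)) {
                count++;
            }
        }
        return count;
    }

    /**
     * Returns the guess with the highest keyword count in its article.
     * Ties (including "no article mentions the keyword") keep the earliest
     * guess, which is assumed to be the recognizer's top choice.
     */
    static String pickBest(List<String> guesses, String keyword, Map<String, String> articles) {
        String best = guesses.get(0);
        int bestScore = -1;
        for (String guess : guesses) {
            int score = countOccurrences(articles.getOrDefault(guess, ""), keyword);
            if (score > bestScore) {
                best = guess;
                bestScore = score;
            }
        }
        return best;
    }
}
```

For "What is the color blue", the guesses might be glue, blue and flu with "color" as the clue; the article about blue would normally win because it mentions the clue word most often.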

| 3. Sep 2008 Started coding again and it seems JMF will not work on Vista, so I plan to switch to another Java API wrapper that uses DirectShow directly. Anyway, I have created the outline of the distributed server processes I have planned. When you fire up JCP1 it will broadcast a UDP "ping" on the subnet announcing itself. The robot runs an RMI registry that any server can add its RMI services to for the robot to use. When the robot is shut down, the server processes go back to listening for robot "pings" again. My first service API and implementation offers the ability for the robot to display a fullscreen message, show an image (passed or loaded on the server), start/stop music and start external programs. This is quite powerful because the robot can then start a remote RoboRealm server which it uses for vision processing whenever it needs it. It can also display information you ask for on a monitor/TV in front of the user. Each server will register its service object with a name indicating where it is located and if it has a screen (some can be headless). The robot can then use the display that is closest to its current location. An example: if I am sitting in my living room I can ask the robot to show me some pictures of my kids, and the robot will turn on the TV, switch to the media PC and run a full screen slideshow of pictures stored on the media PC or the robot. I can ask it to play some music by Sting and it will generate a playlist that is used in Winamp (there is a nice command line tool for operating Winamp from another process). I will also add playback of movies, although I need some good way of controlling the media player from the command line for that to work (or I could add a general media player based on a DirectShow wrapper). The idea is for the robot to use all available computing resources through this interface so that it can also distribute its processing requirements, especially when multiple vision algorithms are needed. That might require me to echo the image data between the servers though, since those are running on gigabit ethernet, which is very fast compared to the wireless connection the robot is using. |
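A rough Java sketch of the announce step could look like this. The message format (`JCP1-PING host port`), the class name and the UDP port are all assumptions made up for the example; only the idea of a UDP broadcast that tells servers where the robot's RMI registry lives comes from the entry above.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.charset.StandardCharsets;

/** Hypothetical announcer: broadcasts where the robot's RMI registry can be found. */
class RobotAnnouncer {
    static final String PREFIX = "JCP1-PING"; // made-up message tag

    /** Encodes the announcement as "JCP1-PING <host> <port>". */
    static String encode(String registryHost, int registryPort) {
        return PREFIX + " " + registryHost + " " + registryPort;
    }

    /** Returns {host, port} for a valid ping, or null for anything else. */
    static String[] decode(String message) {
        String[] parts = message.trim().split(" ");
        if (parts.length != 3 || !parts[0].equals(PREFIX)) {
            return null;
        }
        return new String[] { parts[1], parts[2] };
    }

    /** Sends one announcement to the local broadcast address on the given UDP port. */
    static void broadcast(String registryHost, int registryPort, int udpPort) throws IOException {
        byte[] data = encode(registryHost, registryPort).getBytes(StandardCharsets.UTF_8);
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.setBroadcast(true);
            InetAddress everyone = InetAddress.getByName("255.255.255.255");
            socket.send(new DatagramPacket(data, data.length, everyone, udpPort));
        }
    }
}
```

A server process that receives such a packet could decode it, look up the registry at that host and port, and bind its service object there under a name describing its location.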

| 27. Aug 2008 Asus has finally released their desktop version of the Eee PC, called the Eee Box, and it looks to be an excellent robotics platform! The big advantage over the new D945GCLF board is that it uses the mobile chipset, 945GSE+ICH7M! Web sites quote that the whole thing consumes around 20 watts idle and peaks at 35 watts during load. The question then is how easy it is to power this thing from a battery. Unfortunately, the PSU is a 19 V DC, 4.74 A, 65 W power adaptor, which means you cannot power this directly from a 12 volt battery, so you would probably have to check if the power board can be swapped out somehow. Anyway, it's exciting times indeed, and on top of that you can get a cheap SSD drive these days, so the OS and software run off a completely silent and fast "medium" that also consumes less power than a normal drive. Btw, the Asus Eee Box measures 17.7 cm x 22.1 cm x 2.7 cm, which makes it an excellent size for a robot of my design. If you take it apart I am quite sure you can shave off a cm or two there too. I am really looking forward to seeing some pictures of the internals. Either way you have a complete platform in this one, memory, drive, OS and all, for only $299! |

| 27. Aug 2008 A new low power Mini-ITX style board is out, the D945GCLF Intel board with an Atom 230 processor running at 1.6 GHz. The initial reviews show benchmarks of Dhrystone ALU: 3889 MIPS and Whetstone iSSE3: 3355 MFLOPS. This is 2-3 times faster than the Via C7 CN13000 but not quite as fast as the D201GLY2A, which has 6172 MIPS and 2687 MFLOPS. Actually the floating point is faster on the new Atom, but the integer operations will be more important for a typical robot application. The Atom consumes 2 watts, while the Celeron consumes 19 watts, so that's excellent performance per watt for the Atom! The only problem is that the chipset alone uses 22 watts on the Atom board. Intel really needs to make a low power chipset soon, or at least see that there are embedded developers that don't need a powerful chipset for their system (as in: no display needed). Still, I would recommend this Atom board for anyone thinking of making a robot, it will have more than enough power for your needs. Heavy vision computations should be run remotely anyway imo, since it allows you to be more free in "wasting" CPU power to achieve what you want. |

| 27. Aug 2008 My main workstation has been upgraded with a brand new Core2Duo and fast memory, and is running Vista in a nice Antec P182 case. I am trying to get the development environment up and running there again, and so far I have had problems with JMF. I hope I can make it work, but I got a bluescreen when it was scanning for capture devices. In time I actually hoped to get rid of JMF, because platform independence isn't that important and there is another option that uses the DirectShow API natively. Other than that I saw that Cepstral has a few new voices in one of their web services, but unfortunately they aren't for sale yet. If you are considering good sounding speech output on your robot, I'd recommend checking out Cepstral (btw I am not paid for saying this :). My new computer seems very powerful and could probably run many RoboRealm vision computations at the same time, so in time I will experiment with a genetic blob and feature detector to see if that could be used as information for the robot's navigation module. This will be very complex though. The general idea is to identify blobs and features and make a "mental map" of blob adjacency, and after a while it could use this information to estimate where it is in relation to other things. The trick is to identify which blobs are stationary and which move about. I guess I need to create some statistical data that is continuously updated to make the robot build a more solid map. As I said, this is very complex stuff and chances are I will just experiment with the idea. Autonomous navigation is one of the areas where my robot is severely lacking. |

| 3. Apr 2008 I went to Biltema and bought some imitation leather and tried to use that as "skin" on the back of the robot as well as on the top and neck. I am not satisfied with the result, but it will have to do for now. I will possibly use alu-plates on the back as well, but stick with leather on the top and perhaps give the robot a leather cape! :) Sounds silly yes, but I think it might look good actually. |

| 2. Apr 2008 I have done some research and decided I am going to get the new Intel D201GLY2 Celeron 1.2 GHz Mini-ITX board as the robot's next brain. This board is very cheap at $75 and performs 2-3 times faster than the old VIA CN13000 I used, but without a great increase in power use. Intel recently announced their Atom processors too, which will also be great as a robot brain and probably outperform any Via system as well. It's hard to think Via can survive very long with these new products from Intel unless they lower their prices by at least half! The D201GLY2 has 2 USB ports on the back and 4 on headers, which is plenty for my robot. I can probably just fit it right in there where I used to have the Via board before. I probably need to either get the fan version or add a small fan there to keep it cool. I probably have to add some ventilation openings in the robot at some point when it is fully "skinned". |

| 1. Apr 2008 I have now successfully integrated the USB-UIRT so that the robot can operate the TV through simple voice commands. Turn on/off, change channels, mute, etc. I added some of my TV channel names as grammars too and the robot will select the right channel on the TV. At the moment it doesn't seem to have a long range, but I think I just need to increase the number of repeats when it fires out a command. The next challenge is naturally to make the robot locate the TV and position itself correctly. This is hard since I have not yet made any good mapping and navigation algorithms. At first I will just try to make the robot face the TV based on the magnetic compass (which is a bit flaky atm). |

| 29. Mar 2008 Fixed the head moving algorithm so that it will track any object (COG) with head move updates once per second. The move speed is dynamically adjusted so that the head reaches its intended position within 1 second. This ensures a smooth head movement when tracking an object (e.g. the user's head or a tennis ball). The next challenge is to add a behaviour where the robot rotates its wheels to center the head if the head is held too long to one side. |
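The speed adjustment boils down to dividing the remaining distance by the update interval. Here is a minimal Java sketch of that idea; the position units, the speed cap and the names are assumptions, since the real servo controller interface is not described here.

```java
/** Hypothetical helper for picking a servo speed per tracking update. */
class HeadTracker {
    /**
     * Speed (position units per second) needed to travel from currentPos to
     * targetPos within intervalSeconds, capped at the servo's maxSpeed.
     */
    static double speedFor(double currentPos, double targetPos,
                           double intervalSeconds, double maxSpeed) {
        double needed = Math.abs(targetPos - currentPos) / intervalSeconds;
        return Math.min(needed, maxSpeed);
    }
}
```

With a 1 second interval, a small correction gives a slow move and a large one a fast move, so the head should arrive about when the next update is due instead of overshooting.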

| 28. Mar 2008 I totally forgot to blog that I have removed the old Via CN13000 board and am now using my Samsung Q1P as the robot brain. The 1 GHz Pentium M performs so much better than the 1.3 GHz Via!!! I have bumped up the fps of my vision code and there is still 50% CPU time left on average! This is good! Considering that I will most probably use a remote server for vision processing, I really don't need any more processing power. I am toying with the idea of taking the Q1P apart and finding a way to mount the 7" touch screen on the robot's chest. The only problem is to find a reliable way of extending the ribbon cable to the LCD. Other than that, the Q1P has everything it needs except it only has 2 USB ports! So I probably need to install a USB hub and power it from the 12 volt battery (regulated to 5 volts). |

| 26. Mar 2008 I just love Java! Some things are so easy to do here. In a couple of hours I have integrated Wii controller support. You can tell the robot "enable manual control" and it will ask you to click 1 and 2 on your Wii controller to connect it. After that you can use the analog stick on the Nunchuk to drive the robot around! WiiRemoteJ was the library I used and I also found a free Bluetooth JSR implementation that works wonderfully. I also added some step based wheel control based on the arrow keys and buttons on the main controller. The plan is to add some sound effects playback when you press certain buttons. That way I can have fun with the kids driving it around and making sounds. Although I prefer autonomous operation, it was so simple to implement that I just had to add this remote control. It's also nice to have an external control option for triggering certain modes and scripts from the Wii controller as well. |
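Driving a two wheeled robot from an analog stick is usually a small mixing calculation. The Java sketch below shows one common way to do it. It does not use the WiiRemoteJ API at all: it assumes the stick position has already been read and scaled to the range -1 to 1, and the class and method names are invented for the example.

```java
/** Hypothetical stick-to-wheel mixer for a differential drive robot. */
class StickMixer {
    private static double clamp(double v) {
        return Math.max(-1.0, Math.min(1.0, v));
    }

    /**
     * Mixes a stick position into wheel speeds.
     * x: -1 (full left) to 1 (full right); y: -1 (back) to 1 (forward).
     * Returns {left, right} wheel speeds, each clamped to -1..1.
     */
    static double[] mix(double x, double y) {
        double left = clamp(y + x);   // pushing right speeds up the left wheel
        double right = clamp(y - x);  // and slows down the right wheel
        return new double[] { left, right };
    }
}
```

Pushing the stick straight forward gives both wheels full speed, and pushing it sideways makes the wheels turn in opposite directions so the robot rotates on the spot.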

| 24. Mar 2008 I added a simple "map the area" command, and the robot will rotate 360 degrees and use its front ultrasonic sensor to make a sonar readout. I then draw the output on a sonar graph shown in the robot GUI. I just wanted to try this out since the sonar will be important for the internal mapping routines that I will be working on next. |
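Drawing such a sonar graph mostly means converting each (angle, distance) reading into an x/y point. A minimal Java sketch, assuming the readings are taken at a fixed angular step during the rotation and that distances are in centimeters (both assumptions, as are the names):

```java
/** Hypothetical converter from a rotating sonar sweep to 2D points. */
class SonarSweep {
    /**
     * rangesCm[i] is the distance measured after rotating i * stepDegrees.
     * Returns one {x, y} point per reading, with the robot at the origin
     * and angle 0 pointing along the positive x axis.
     */
    static double[][] toPoints(double[] rangesCm, double stepDegrees) {
        double[][] points = new double[rangesCm.length][2];
        for (int i = 0; i < rangesCm.length; i++) {
            double angle = Math.toRadians(i * stepDegrees);
            points[i][0] = rangesCm[i] * Math.cos(angle);
            points[i][1] = rangesCm[i] * Math.sin(angle);
        }
        return points;
    }
}
```

Connecting the resulting points in order gives a rough outline of the walls and obstacles around the robot.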

| 20. Mar 2008 I've added a new RoboRealm detection that will detect and follow a tennis ball with its head. I experimented with faster tracking, issuing head movements 3 times a second, but I see that I need to enhance my servo module so that I can set the position directly and not use the servo controller registers for moving. If I use those, the head will get several move commands and frequently move past the goal point, whirring back and forth. By lowering the move rate to once every 2 seconds it follows the ball nicely. I also experimented with adjusting the move speed based on the distance so that I could be certain that the head reached its goal position before I sent a new position. I also had to add semaphores so that only one task could use the head servos at a time, to avoid conflicting behaviours. I want to further enhance this to rotate the whole robot when the head is turned a lot to the left or right. This will probably be triggered when the head is turned beyond a certain position. And within that threshold I will also add a timer which would turn the whole robot after its head has been turned to one side for a while. The plan is to make it an inherent feature of how the robot "looks" at things so that the individual behaviours don't have to worry about this problem. |
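Guarding the head servos with a semaphore can be sketched with `java.util.concurrent.Semaphore`. This is an illustration only: the class `HeadServoLock`, the timeout handling and the idea of passing the head task as a `Runnable` are assumptions, not the robot's actual servo module.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

/** Hypothetical guard that lets only one behaviour move the head at a time. */
class HeadServoLock {
    private final Semaphore permit = new Semaphore(1); // a single permit: one user at a time

    /**
     * Runs headTask if the head becomes free within timeoutMs.
     * Returns false if another behaviour kept the head busy (or we were interrupted).
     */
    boolean runExclusive(Runnable headTask, long timeoutMs) {
        try {
            if (!permit.tryAcquire(timeoutMs, TimeUnit.MILLISECONDS)) {
                return false; // head is in use by another behaviour
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt flag for the caller
            return false;
        }
        try {
            headTask.run();
            return true;
        } finally {
            permit.release(); // always hand the head back
        }
    }
}
```

A ball tracking behaviour and a face tracking behaviour could both go through the same `HeadServoLock` instance, so whichever asks second simply skips its move instead of fighting over the servos.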

| 14. Mar 2008 No, the date is not wrong. A year has actually passed between these blog entries! :) The robot has been sitting on my desk for a long time now. I lost motivation when I faced performance issues on the Via board (hint: get something better). But I have now picked up the project again. My latest discovery is a great vision tool called RoboRealm. This is a really nice program that can act as a vision server which you can send images to for analysis. I immediately wanted to try to realise a fun little thing, and that was to add a grammar where I can ask it "What shape is this" and it will locate the sheet of paper I am holding up with a simple symbol on it. It now recognizes a star, rectangle, square, circle and triangle. And it works brilliantly! I also enhanced my software so that it will connect to a remote RoboRealm server and load the robo scripts that are required for a certain vision task. I have a list of things I want to try next. I also fixed a couple of timing issues with the AskQuestionBehaviour so it isn't quite so annoying. :) And I changed the ChatBehaviour so that it has to see a face before it reacts to hello's, hi's, etc. This was needed because the robot thought it heard someone say hi all the time. Chances are that I will also add grammar timers so that a certain grammar can only be triggered once within a certain time span. Another solution would be for the greeting command to make it turn around and look for a face before it greets back. Naturally this should only happen if the robot isn't doing something important (is in idle/creative mode). |

| 12. Mar 2007 Received the USB-UIRT that I am going to use on the robot so that it can turn on and off the TV, receiver and other IR devices. I will also try using an ordinary remote control (that I am not using otherwise) to send commands to the robot. There is already a nice Java API for the USB-UIRT that I can use for this. |

| 12. Mar 2007 Realized that it was much easier to just attach the ultrasonic sensor to the front panel holes instead of having it mounted on the robot. The Robot-Electronics SRF10 sensor has some nice rubber rings for mounting which were very easy to fasten, and it made the whole thing look much better. I also shaped and cut the alu panel on the right side of the robot. I have fastened the panels using velcro, which works better than I had imagined. I used some of the leftover aluminium to make some simple brackets which I fastened to the base using double sided tape, and then used velcro on top of that. I also saw that I should try to use as little velcro as possible, since it sticks so well that it almost became hard to get the panel off afterwards! :) Well, 3 more alu panels and the robot will be looking much better. I have to cut some holes for the power buttons (main and PC) as well as 2 USB ports I'd like to have available on the outside. On the top I plan to add some cloth that is mounted up as the "neck", and it has to be loose enough that the robot can pan and tilt the head. |

| 11. Mar 2007 Started drilling some holes in the aluminium body front panel for the ultrasonic sensors. The plan was for them to stick out a bit, but I think I will ditch that idea and just move them some millimeters in and have the holes in the body expose the ultrasonic transducers. Next I want to get some alu brackets I can attach to the base and then use velcro to fasten the alu body. I think that should hold it in place well enough. The velcro should also minimize any rattling. I also fastened the head bracket and have found a way to attach the wires underneath so that they stick out a bit in the back and have enough flex to move the head without giving the servos too much resistance. I fixed the head behaviour code so that if it doesn't see a face it will move its head in a random fashion with a 20% probability of facing forward. I will probably work more on this and make the head movement more natural. One feature I want to add is motion detection that will trigger head movement, so that when it's standing still it will do a motion check and move its head to look at the area of the image that had the most motion. Chances are that it will find a head it can look at after this movement. |
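The random head behaviour can be pictured as a small weighted choice. In this Java sketch the pan range, the center position of 0 and the class name are assumptions; only the 20% chance of facing forward comes from the entry above.

```java
import java.util.Random;

/** Hypothetical idle head mover: mostly random, sometimes straight ahead. */
class IdleHeadMover {
    static final int CENTER = 0;               // assumed "facing forward" pan position
    static final double FORWARD_CHANCE = 0.20; // 20% of moves face forward

    /** Picks the next pan position between minPan and maxPan (both inclusive). */
    static int nextPan(Random rng, int minPan, int maxPan) {
        if (rng.nextDouble() < FORWARD_CHANCE) {
            return CENTER;
        }
        return minPan + rng.nextInt(maxPan - minPan + 1);
    }
}
```

Calling `nextPan` every few seconds while no face is visible gives a head that wanders around but keeps returning to look straight ahead.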

| 10. Mar 2007 It's been a week since I last worked on the robot. Just wanted a break to do some other stuff. I have worked more on the question Behaviour and found a pattern that can enable and disable the behaviours based on questions and answers, as well as a timeout so that it will revert to the standard grammar set when it times out. I also did some fixes to the free roaming behaviour so that it moves a bit faster, has more responses and is generally a bit better. I have realised that the wheels are just too small, especially the caster wheel, which all too often gets stuck on the carpet edges. Since I only have one ultrasonic sensor in the front, it's also not very intelligent in choosing a path for the moment. I have still not added any map navigation but that will come soon too, hopefully something that can be constructed in real time as it is exploring its area. Chances are that I need some reference points it can use to recalibrate itself in some way, preferably through its vision. |

| 3. Mar 2007 This weekend I reworked the Behaviour classes a bit so that they have 4 states: active, inactive, paused and running. This was to enable context behaviours and to be able to distinguish between a behaviour that is just paused and one that is completely deactivated. Furthermore I added categorisation of behaviours so that e.g. CRITICAL behaviours are always on, while STANDARD ones can be paused. The Consciousness processor that manages these behaviours has methods for pausing and resuming them. This is the basis I needed to enable conversational behaviours, so that the robot disables a number of behaviours while it is having a conversation with you. This also enables temporary behaviours that e.g. can simplify speech input by accepting a subgrammar that uses shorter commands (e.g. "forward" instead of "move wheels forward"). I have realised that my code needs some semaphores for the sound input/output so that the threads don't trip over each other's feet as the recognizer is disabled and enabled. There have been cases where the hearing processor is active when it should not be, and where it has not been turned on again after some voice output. These cases have been further complicated by the enabling and disabling of grammars. |
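
To make the state and category idea a bit more concrete, here is a minimal sketch in Python (the robot's own code is Java). The class and method names are hypothetical, and the rule that only active STANDARD behaviours get paused is my reading of the entry, not a copy of the real implementation.

```python
from enum import Enum


class State(Enum):
    ACTIVE = "active"
    INACTIVE = "inactive"   # completely deactivated
    PAUSED = "paused"       # temporarily off, e.g. during a conversation
    RUNNING = "running"


class Category(Enum):
    CRITICAL = "critical"   # always on, never paused
    STANDARD = "standard"   # may be paused


class Behaviour:
    def __init__(self, name, category=Category.STANDARD):
        self.name = name
        self.category = category
        self.state = State.ACTIVE


class Consciousness:
    """Hypothetical manager that pauses and resumes behaviours."""

    def __init__(self, behaviours):
        self.behaviours = list(behaviours)

    def pause_standard(self):
        # Only active STANDARD behaviours are paused; CRITICAL ones stay on
        # and inactive ones stay inactive.
        for b in self.behaviours:
            if b.category is Category.STANDARD and b.state is State.ACTIVE:
                b.state = State.PAUSED

    def resume_paused(self):
        # Paused behaviours come back; deactivated ones are left alone.
        for b in self.behaviours:
            if b.state is State.PAUSED:
                b.state = State.ACTIVE
```

Keeping "paused" separate from "inactive" is what lets a conversation end without accidentally switching on behaviours that were turned off on purpose.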

| 2. Mar 2007 Ok, I have now created a new subdomain at http://robot.lonningdal.net (where you are reading this). Hoping that these pages will be of some interest to people who are also building general purpose PC robots. Some day I plan to add some blueprints and a bill of materials for my design so that you can create a JCP1 too if you want. :) - I am not sure when or if I will be ready to give out the code freely. I might even consider writing a book about it and let everyone see the solution there. |

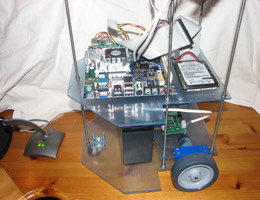

1. Mar 2007 Finally I tried bending the aluminium sheets to fit the front of the robot, with some success. It's quite easy to bend 0.5 mm thick sheets and they can be cut with normal scissors. Although I wish I could get cleaner bends, the result was quite alright. I think the robot will look cool with the aluminium plates all around it. For the top I am planning to use some black leather look-alike material that can be sewn to form a turtle neck to hide the servos while still being flexible enough for the neck to turn and tilt. As you can see in the picture the aluminium plates already got some scratches, so handle them with care if you want a clean finish. And you can also see that the plate is not fixed in any way, but resting on a couple of Duplo Lego bricks! :) The plan is to add some L-brackets to each level and then use velcro (with adhesive back) to hold the body plates in place. If the plates start to make rattling sounds when the robot is moving I will probably add some soft lining to the edges of each level, preferably some thin rubber. |

1. Mar 2007 After much software work I thought it was about time I did some updates to the base. I adjusted the layers so that a 40 cm aluminium sheet would be high enough to fit the front with an angle at the chest. I then decided on the final layer layout and sawed off the threaded rods sticking out on the top. I then dismounted the camera and fixed it onto the speaker, and attached the speaker to the servo pan/tilt instead. So now the head is getting a bit better with the speaker embedded. For the future it's tempting to dismantle it all, build a custom head and fit the camera and speakers in that. I also drilled a hole behind the "neck" for the USB and servo cables. I see that the weight of the USB cables can be a problem and might cause the servos to struggle a bit, so I need to figure out the best way to hang these. This tidied everything up a bit with all USB cables inside the body. |

1. Mar 2007 Power, power, power. As my previous blog entry states, power usage is one of the major concerns since it's no fun to have a robot that can be around for 2 hours and then has to park itself for 8 hours until you can use it again! Power use was also the main reason why I bought the Epia Via C7 processor - but in spite of all the marketing, the mini-itx boards aren't that power efficient - really! If you look at the power simulator screenshot, you will see that the robot draws almost 29 watts in total using office applications. I chose this since it's the highest value you get from the simulator - remember that a robot's CPU is probably overloaded all the time depending on how much vision calculation has to be done on it. 29 watts! That's quite a lot - my 10 Ah battery would run for 3.44 hours! I do not have an accurate estimate of the battery lifetime on the robot right now but it seems to be around 3-4 hours, and that's without it running about much either. So get bigger batteries you say! Well, weight is my main concern here - the alternative is really to get more amp-hours per kg, which means NiMH or Lithium Ion - both quite expensive solutions, and some solutions might not deliver the amps needed for it all (if both my motors stall they can draw 5 amps together!). So what's the alternative then? Well, it seems that the brain (motherboard) is the main continuous power draw in a robot of this type, so the solution would simply be to swap it out - but with what? Well, the only real alternative is to use a small laptop or a UMPC. The Sony Vaio UX 180p springs to mind, and would be an excellent brain for a robot - with a nice touch screen as a bonus. These devices really don't draw much power. My UMPC, a Samsung Q1 with a 1 GHz Pentium-M, typically uses around 10-11 watts at full load with screen and wireless on. That's very, very efficient and beats the pants off any Epia Via C7 mini-itx system, as well as providing twice the CPU power! Again, if I could afford it, I'd get a UX180p any time for a project like this... sigh! Consider the run time I'd have with that coupled with a 2 kg 200 Wh Battery Geek Lithium Ion battery!... but at an insane price! :) |
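
The run time arithmetic in this entry can be written down as a small calculation. This is a rough sketch in Python, not a measurement: the 12 V nominal battery voltage is an assumption on my part, and the figures ignore motors, servos and regulator losses.

```python
def energy_wh(capacity_ah, voltage):
    """Stored energy in watt-hours for a battery of the given capacity."""
    return capacity_ah * voltage


def runtime_hours(energy_in_wh, draw_watts):
    """Ideal run time: energy divided by a constant power draw."""
    return energy_in_wh / draw_watts


if __name__ == "__main__":
    # 10 Ah battery at an assumed 12 V nominal, 29 W draw from the simulator.
    print(round(runtime_hours(energy_wh(10, 12), 29), 2))
    # The 3.44 hours quoted in the entry matches about 100 Wh of usable energy.
    print(round(runtime_hours(100, 29), 2))
    # A 200 Wh pack feeding a UMPC that draws about 10.5 W.
    print(round(runtime_hours(200, 10.5), 1))
```

With a full 120 Wh the ideal figure comes out a little over 4 hours, so the 3.44 hours in the entry presumably allows for not draining the battery completely; how that margin was chosen is not stated. The UMPC case lands at roughly 19 hours for the computer alone.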

28. Feb 2007 Got the Switching Regulator from Dimension Engineering in the mail, so I removed the battery pack, wired this up and adjusted it to give out 6 volts for the servos. Everything worked fine! Good to get rid of that battery pack since it simplifies recharging the robot a lot. About batteries, I also posted this on the OAP mailing list: I think one should strive for a solution that will power the robot for at least 8 hours. Of course you can add some heavy duty batteries, but that in turn requires more powerful motors. The RD01 kit from Robot Electronics (Devantech) that I am using isn't really built to carry a large battery, and the 10 Ah SLA battery I use today already weighs 3.3 kg! It's almost tempting to get a large NiMH battery pack instead - except the cost is almost 6 times higher for one at around 10 Ah. The advantage is that it weighs half as much as the SLA battery, so I can actually have a 20 Ah pack on my robot and still keep it at the 8 kg I have as the goal! I have found a company that sells 12 volt NiMH battery packs all the way up to 50 Ah using simple D-cells - so it is possible, but you need a hefty recharging solution for this. Another concern is battery recharge times: a typical 10 Ah SLA battery should preferably only be charged with a low current. My CTEK XS 3600 charger uses 0.8 A for batteries lower than 14 Ah and will use 10 hours to recharge the battery! If it had been e.g. a 20 Ah battery it could be charged at 3.6 amps, which would only take 5 hours. A 20 Ah SLA battery would truly blow my weight budget completely and I'd have to get more powerful motors. Maybe some ideas to bring along when I start JCP2 sometime in the future! :) |

| 27. Feb 2007 I started work on what I call behaviour trees, which is a system that will dynamically alter the currently active behaviours. So if a certain behaviour is triggered it can enable some new behaviours and disable others. This is the foundation I need to be able to create conversations, and to begin with I did a simple behaviour where, if it has seen a face for 10 seconds, it will ask if it can ask you a question. The new behaviour now is that you can answer it yes or no (and variations of this). I am also going to add timers to behaviours so that certain behaviours are only active for a certain amount of time. For example the question behaviour will only be active for 10 seconds until it goes back to normal operation (or the previous state). It's becoming an interesting state driven engine now. I also added global timer triggers to the Consciousness module so that I have one single thread firing events to different modules instead of each behaviour module having to run its own thread. |
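
A timed question behaviour driven by one shared timer could look something like the sketch below. It is written in Python for illustration (the robot's code is Java), all names are hypothetical, and time is passed in explicitly so the example stays deterministic; only the 10 second window and the yes/no answers come from the entry.

```python
class TimerHub:
    """One scheduler firing timeouts for many behaviours (hypothetical name)."""

    def __init__(self):
        self._pending = []  # list of (deadline, callback)

    def call_at(self, deadline, callback):
        self._pending.append((deadline, callback))

    def tick(self, now):
        """Fire every callback whose deadline has passed and keep the rest."""
        due = [cb for deadline, cb in self._pending if deadline <= now]
        self._pending = [(d, cb) for d, cb in self._pending if d > now]
        for cb in due:
            cb()


class QuestionBehaviour:
    TIMEOUT_S = 10  # seconds the yes/no answers are accepted

    def __init__(self, hub):
        self.hub = hub
        self.active = False
        self._round = 0  # guards against a stale timeout from an earlier question

    def ask(self, now):
        self.active = True
        self._round += 1
        this_round = self._round
        self.hub.call_at(now + self.TIMEOUT_S, lambda: self._expire(this_round))

    def answer(self, word):
        """Accept 'yes' or 'no' while active, then go back to normal operation."""
        if self.active and word in ("yes", "no"):
            self.active = False
            return word
        return None

    def _expire(self, round_id):
        # Only the timeout belonging to the current question may deactivate it.
        if round_id == self._round:
            self.active = False
```

In a real system `tick` would be called from the single timer thread with the current clock time, so behaviours never need threads of their own.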

| 26. Feb 2007 Did some modifications to the roaming behaviour so it's more robust and navigates a bit more intelligently. I realised that it has problems getting over the carpet corners if it approaches at an angle. This is because the caster wheel is flat and made of plastic. I think I want to swap this with an oval rubber caster instead to make it better. The caster rotation hinge is also terrible, with way too much friction - I had to use some CRC on it to get it to rotate the way it should. |

| 25. Feb 2007 Added code for long term memory, a database system where you can store any kind of information on a tag and add relations between tags. I then used this to store the creation time of the JAR file containing the robot code, so that it will look at this every time it starts up, and if it has been updated the robot will utter words of happiness about its software update. It's quite fun! :) |
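
Roughly, the tag store and the software update check could work like the sketch below. It is in Python purely for illustration and keeps everything in memory; the real thing is a database used from Java, and every name here (including the `software.jar_time` tag) is made up for the example.

```python
class LongTermMemory:
    """In-memory stand-in for a tag database with relations between tags."""

    def __init__(self):
        self.values = {}        # tag -> stored value
        self.relations = set()  # (tag_a, relation, tag_b) triples

    def store(self, tag, value):
        self.values[tag] = value

    def recall(self, tag, default=None):
        return self.values.get(tag, default)

    def relate(self, tag_a, relation, tag_b):
        self.relations.add((tag_a, relation, tag_b))

    def related(self, tag, relation):
        return sorted(b for a, r, b in self.relations if a == tag and r == relation)


def software_was_updated(memory, jar_time):
    """Compare the JAR timestamp with the remembered one, then remember the new one.

    Returns True only when an earlier timestamp exists and the new one is later.
    """
    previous = memory.recall("software.jar_time")
    memory.store("software.jar_time", jar_time)
    return previous is not None and jar_time > previous
```

On the very first start there is nothing to compare against, so the sketch stays quiet and only remembers the timestamp.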

| 24. Feb 2007 Haven't done much this weekend except that I added a behaviour where you can ask it about currencies. It will download the latest currency rates off the net automatically. Later I am going to add the ability to convert currencies as well. It's quite useful since I often purchase things from the USA and would like to know how the dollar compares to the Norwegian krone. I also added a roaming behaviour where it will just go around randomly exploring the room. |

| 20. Feb 2007 Some minor software modifications as well as the ability for the robot to play back wav files. I use this to play back sound effects so I can make it laugh, snore or make animal sounds. It's a fun feature, and I have already added some behaviour code that interacts with it. My daughter will love it for sure! :) |

| 19. Feb 2007 Since the robot now has access to the internet, I quickly made a behaviour where you can ask it about the current weather as well as a forecast for the next day. This data is retrieved from a Yahoo Weather RSS feed, parsed and uttered by the robot. It works brilliantly and I definitely plan to add more useful features that use the internet. Among these are a POP3 mail checker, an RSS news reader and a currency converter. In time I might explore the possibility for the robot to look up articles on Wikipedia which it can read for you. This requires me to use a dictation mode for the words to look up and will probably not be very precise. Still, it's an interesting concept I want to explore. |

| 19. Feb 2007 I finally bought a wireless card that I found on a special sale. It's a 54 Mbit/s PCI wireless card, which is more than enough for my planned use. Installing a new version of my software on it is a breeze now: since the robot's drive is shared on the net, I can pack the JAR file with one click and deploy it immediately. I also installed VNC and can remotely control and see the user interface of the robot. Fortunately it doesn't seem to eat much CPU time either. Connecting with VNC I was also able to figure out what happened when you boot the Via Epia board in headless mode. It was always reverting back to 640x480 resolution, which in turn caused my GUI layout manager to collapse in a way that made text fields so small that they got stuck in some layout repaints that quickly gobbled up 100% of the CPU time. A quick fix for this resulted in a much smoother running robot, with average CPU load at around 50% with face detection being run once every 3 seconds. The camera stream is still what consumes the most CPU time, and I really want to explore the possibilities here to lower the CPU use from this part of the software. I really only need one picture per second for the robot to work satisfactorily, so it's a shame my code has to retrieve a stream of image data that it has to ditch. For my Logitech camera, the framerate adjustment doesn't work either. |
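
Ditching the unwanted frames amounts to a simple throttle like the one sketched below. It is written in Python for illustration with made-up names; note that it only skips the processing of extra frames and does nothing about the cost of receiving the stream itself, which is the part I would really like to get rid of.

```python
class FrameThrottle:
    """Let through at most one frame per interval and drop the rest."""

    def __init__(self, min_interval_s=1.0):
        self.min_interval_s = min_interval_s
        self._last = None  # timestamp of the last frame that was processed

    def should_process(self, timestamp_s):
        if self._last is None or timestamp_s - self._last >= self.min_interval_s:
            self._last = timestamp_s
            return True
        return False  # frame arrives too soon after the previous one
```

Each incoming frame is checked against the throttle, and only frames for which `should_process` returns True are handed on to the vision code.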

18. Feb 2007 I have revamped the web pages a little bit, adding a title with links to all pages so everything here is reachable from everywhere. Also added a "What can it do?" page where you can eventually read about all the things the robot can do so far. Here is also a screenshot of the user interface (without compass readings update). This user interface is of course only accessible if you connect a screen to it or use VNC or remote desktop. So far I don't have a wireless network card, but this is to be added very soon. |

18. Feb 2007 This weekend I did a number of changes; the most notable one was to change the camera from the cheap Creative Live to my good old Logitech Quickcam Pro 4000. This made the picture much clearer, and during some tests the robot also seemed to use less CPU power on the camera stream (more about this later). The Logitech camera also has an integrated microphone which seems to work fine for speech recognition. This saves me one more device and leaves me with one free USB port on the motherboard. I also tried interfacing the CMPS03 digital compass, and although I was able to read values from it I was unable to get any precise data from it. I tried calibrating it, but the best results I have so far only seem to give me good values for three compass directions and terrible values for the fourth. I also have to be careful with where I mount the compass since its values were totally off when it was some 12 cm from the speakers on the robot. Chances are that I have to mount it on the bottom level behind the battery, hoping that the motors won't ruin the readings totally. The software on the robot was updated and I added some more commands as well as tuning some of the responses. The battery level measurement from the motor controller seems to be off by 0.3 volts, so I just added that in software, which seems to give me more accurate information about when it needs to be recharged. I have also found a nice company selling switching regulators that are ideal for regulating 12 volts down to 6 volts for the servos. |

| 13. Feb 2007 I have done a number of software changes and additions. Among these, the robot will now pause the speech recognizer whenever it is talking to avoid having the mic pick up its own words as commands. This worked fine and the robot became a bit more "quiet". :) I also added some code to move the head's tilt/pan servos depending on where the largest face in its vision is. This worked somewhat, but I have realized that the Creative Live Vista web camera I have is terrible in low light conditions, so I definitely have to change this. Chances are that I will swap it with my desktop computer's Logitech Pro 4000 camera, which seems to work much better in lamp light. That camera also has an integrated mic which seems to work ok for voice recognition. I also added some more conversational commands, so you can ask it what the time is and how it is feeling. If its battery is very low it will say that it doesn't feel too well, that it needs more power! :) A grammar for asking about CPU load and sensor readouts was also added. It's nice to have these there because I don't have a screen on this and all debug information has to come through its voice now. I desperately need a wireless card in this now for debugging as well as a simpler way to install new software. The microphone still picks up servo sounds of course, and I am thinking about trying to time the head movements to periods when the robot is talking (hence not listening), but that might be too limiting. I might consider trying to build the head in such a way that it dampens most of the servo motor sounds. The next thing I want to do with the robot now is to interface the digital compass module. |
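
The head tracking idea (pick the largest face, then turn towards it) can be sketched as below. This is an illustration in Python with hypothetical names; the rectangle format, the normalised -1..1 output and the small dead band around the centre are assumptions for the example, not details of the actual Java code.

```python
def largest_face(faces):
    """faces is a list of (x, y, w, h) rectangles; return the biggest by area, or None."""
    return max(faces, key=lambda f: f[2] * f[3], default=None)


def head_correction(face, image_w, image_h, deadband=0.1):
    """Return (pan, tilt) in -1..1: how far the face centre is from the image centre.

    Offsets smaller than the dead band are treated as zero so the servos
    do not twitch when the face is already roughly centred.
    """
    x, y, w, h = face
    pan = ((x + w / 2) - image_w / 2) / (image_w / 2)
    tilt = ((y + h / 2) - image_h / 2) / (image_h / 2)
    pan = 0.0 if abs(pan) < deadband else pan
    tilt = 0.0 if abs(tilt) < deadband else tilt
    return pan, tilt
```

The returned offsets would then be scaled into servo steps; how large those steps should be depends on the servos and the camera's field of view.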

| 11. Feb 2007 This weekend I assembled it all except the compass module. For the first time I also ran the Mini-ITX on battery power, which worked flawlessly. I have updated my code to support it all and reworked my behaviour code so that it's more object oriented. The robot can now run by itself using simple commands. I had to deactivate the speech recognition every time the robot spoke or it would very easily hear its own words as commands! :) For the moment the robot is very talkative and has some random sentences as well as some famous quotes. It will also talk about its battery state once in a while, but more frequently when the battery is getting low. Furthermore it will react to its ultrasonic sensor when something is closer than 20 cm. If it's moving forward it will stop the motors, otherwise it will just say that something is very close to it. The robot will also greet when it sees a face after a period of not seeing a face. The only thing the robot is missing now is a wireless network adapter. It's very tedious to get the latest version of my code onto it now - I have to transfer it using a USB stick. Also the Creative web camera drivers seem to have a bug, since I am unable to disconnect from the camera without the whole Java VM hanging. This ruins my auto-update plans for the software as well. I was hoping to add a function that checks for an updated version of the software and auto-downloads it. The general idea is to have a seamless software upgrade that doesn't require me to have a screen/keyboard/mouse connected to it. |
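
The ultrasonic reaction described in this entry boils down to a small decision rule. Here it is as a Python sketch (the real code is Java); the function name and the returned action strings are made up, while the 20 cm threshold is the one from the entry.

```python
def react_to_obstacle(distance_cm, moving_forward, threshold_cm=20):
    """Decide what to do with an ultrasonic reading.

    Returns None when nothing is closer than the threshold, "stop_motors"
    when the robot is driving forward, and "say_too_close" when it is not.
    """
    if distance_cm >= threshold_cm:
        return None
    return "stop_motors" if moving_forward else "say_too_close"


if __name__ == "__main__":
    print(react_to_obstacle(15, moving_forward=True))
```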

| 9. Feb 2007 Checked out the new Creative Live! Cam Voice web camera in a store. It has an integrated array mic which should make it better for speech recognition and probably a bit more directional. It looks pretty cool and should probably work fine except that the Creative Live Vista cam I am using now seems to hang when you close the data stream. Chances are that I will use a webcamera with an integrated mic to reduce the number of devices and avoid having a separate microphone. |

| 8. Feb 2007 The Jameco package contained all I need to wire everything up now, except that I forgot to order the power plug thingy to connect to the picoPSU. I think I managed to find something like that at Clas Ohlson today and will try to use that. I also picked up a soldering iron kit so that I can add the connector to the sensor and handle other soldering needs which will no doubt spring up. |

| 8. Feb 2007 The USB speakers have decent sound and came with some drivers which I also tried. They had some environmental sound effects features which I thought I could use to make the robot sound a bit cool, but I soon realised that this was all implemented in the driver software and was not a feature of the speakers. This basically meant that the CPU load jumped to 100% every time it spoke and even made the sound break up in a stutter. This was swiftly uninstalled! :) |

| 8. Feb 2007 Eager to get the OpenCV face recognition up and running, I made a behaviour module that looks for a face every 10 seconds and will say a greeting or something when it finds one. It's very fun and worked immediately. I will add some more parameters to this so that it only triggers on faces that are close to it and only greets if it hasn't seen a face for some time. This is all very simple to do in software. |
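
The "only greet if it has not seen a face for some time" rule could be sketched like this. It is Python for illustration only, the class name is hypothetical, and the 60 second quiet period is an arbitrary assumption since the entry does not give a number.

```python
class Greeter:
    """Greet on a face only after a period without any face."""

    def __init__(self, quiet_period_s=60):  # 60 s is an assumed value
        self.quiet_period_s = quiet_period_s
        self._last_seen = None  # time of the most recent face sighting

    def on_check(self, face_seen, now):
        """Call after each face check; returns True when a greeting is due."""
        if not face_seen:
            return False
        greet = (self._last_seen is None
                 or now - self._last_seen >= self.quiet_period_s)
        self._last_seen = now
        return greet
```

Because the last sighting is updated on every check with a face, someone who stays in view is greeted once and not again every 10 seconds.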

| 8. Feb 2007 The package from Jameco arrived, and I bought some small Trust USB speakers which work brilliantly for the robot. The funny thing is that they look like eyes, so the immediate thought was to mount them on the head as if they were eyes, although there would be a camera there too, over or under them. :) The servo brackets from Lynxmotion and their Pan/Tilt kit are excellent and I mounted it all on the top, and even managed to fit the web camera on the tilt bracket very easily. In time I will of course disassemble this, create a frame for the head and mount it all there instead. |

| 7. Feb 2007 After much trial and error I managed to get my OpenCV Java-JNI C code to compile in Visual Studio Express and soon after I was able to create my Java integration to OpenCV for face recognition. My API has methods for sending down a 24 bit BGR style image which is copied into a temporary OpenCV image kept in the native implementation. The next improvement will be to allow it to maintain several cascades so that you can have it recognize different types of objects. I just have to learn how to create new training sets - which might be more difficult than I hoped. |
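
For readers wondering what a 24 bit BGR image means in practice: each pixel is three bytes in blue, green, red order. The sketch below shows that byte layout in Python; it assumes the pixels start out as packed 0xRRGGBB integers, which is a guess for the sake of the example and not a description of the actual JNI code.

```python
def rgb_ints_to_bgr_bytes(pixels):
    """Convert packed 0xRRGGBB integers into a flat blue-green-red byte buffer."""
    out = bytearray(len(pixels) * 3)
    for i, p in enumerate(pixels):
        out[3 * i] = p & 0xFF              # blue
        out[3 * i + 1] = (p >> 8) & 0xFF   # green
        out[3 * i + 2] = (p >> 16) & 0xFF  # red
    return bytes(out)


if __name__ == "__main__":
    print(rgb_ints_to_bgr_bytes([0x112233]).hex())
```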

1. Feb 2007 I'll make this a separate posting because it's a small breakthrough so far and was achieved yesterday. I was able to voice activate the robot for the first time. You can now order it to move forward, backward, turn left or right as well as stop. I coded in support for a grammar instead of using dictation, which I did before. It also seems much more efficient performance-wise. The system even accepts my wife's voice out of the box now so she can order it around as well. The GUI frontend has been improved somewhat to give better feedback as well as being able to enable and disable each module. |

1. Feb 2007 It's been a while since the last report and progress is being made. I have ordered wires, crimps, and molex plugs from Jameco. I also ordered a pan/tilt kit for the head, and a solar cell sheet just for fun and hopefully to charge the 6 volt battery pack for the servos. The base has been refined and the wheels, motor, motherboard and hard drive have been mounted. The battery also has a place although it is not secured yet. I have wired up the battery to the motor and made a makeshift cable to hook up the I2C-USB adapter to the motor controller. The software has also been updated and I have made a general purpose I2C Java driver that enables me to communicate with all I2C devices. |

| 19. Jan 2007 First version of the web pages up and running. Hoping to move it all to robot.lonningdal.net some day soon. |

| 18. Jan 2007 Some software testing shows that the Via CN13000g doesn't have enough horsepower to run MS Speech Recognition, which easily eats up all CPU time! :( Need to distribute the processing through wireless networking to make it work like I want. Also made my first JNI bridge between a VC++ compiled DLL and Java. Will work further on this to interface OpenCV with Java. |

| 17. Jan 2007 Via system up and running with Windows XP and all necessary drivers and APIs installed. All works fine. Worked some on the robot software to make a GUI frontend to visualise information about each module (vision, hearing, speech). |

| 16. Jan 2007 Received package with Via Epia CN13000g mini-itx board, memory, webcamera and a case. Looks very nice and small! But World of Warcraft Burning Crusade was also purchased so I just had to play that a little! :) |

| 15. Jan 2007 Got the PicoPSU in the mail. It's really, really small and will be great for my robot. |

| Summer 2005 Made the first sketches and 3D Studio models and renderings. Also prototyped CloudGarden JSAPI. Researched the net and found out about OAP. I had a lot of ideas and wrote down a lot, but didn't take the time to start on the project. It would be some time until I did... |